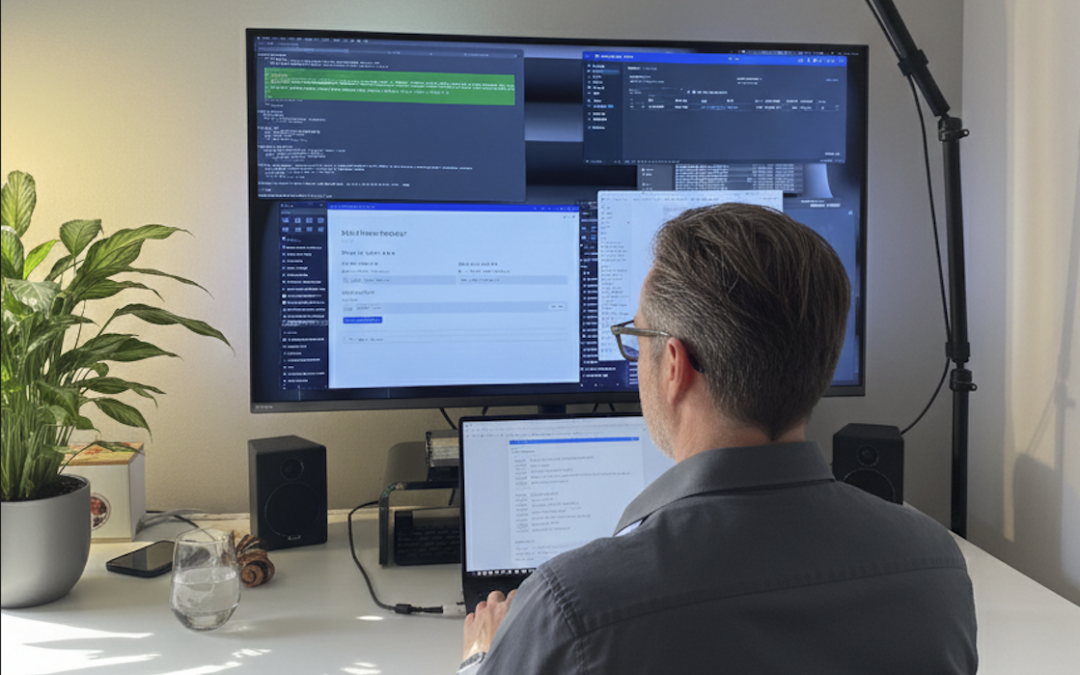

A field report from Michael - requirements engineer, former developer, and now a bit of a tech lead again.

The result up front

At the weekend I built a complete application. As an MVP. With a front end, clean architecture, logging mechanisms, a user-friendly GUI and the possibility to extend the whole thing cleanly.

Effective working time: 16 hours.

Would I have done this as a traditional developer? 3-4 weeks minimum. Applying the developer rule of thumb «take twice as long», more like 6 weeks. That's about 252 hours.

That's not 10x. This is 15x.

And no, I haven't written a single line of code myself.

Who am I - and why is that relevant?

I often introduce myself like this: I'm originally a developer, but I still like talking to people.

My wealth of experience includes software development, the technical management of international dev teams and the role of product owner. I know the challenges of leading development teams and still have a great curiosity for technical details.

But: I've since moved further away from code. My focus is on understanding customer needs, the WHY behind requirements and communicating with developers. My work with code is mostly limited to automation, low-code platforms or small scripts.

With my trainer colleague and friend Matthias, I developed the AI Developer Bootcamp. My role here: concept, didactics, structure. I also experiment with AI dev approaches such as Lovable, Bolt, Windsurf or Roocode - but that's just it: experimenting.

So far.

10x productivity - hype or reality?

Before I get into my experiment, a quick look at the figures we know from our boot camp:

We have evaluated over 150 bootcamp participants. The result: on average, they were 82% faster on typical development tasks with AI support.

That's impressive - but these are controlled exercises. What happens in the real world, in a real project with real constraints?

That's exactly what I wanted to find out.

The trigger: quality assurance for our new module

As part of the quality assurance for a new module in our bootcamp on agentic coding, I wanted to run through our current use case myself. With Claude Code.

To be honest, I've wanted to do this for a long time. It's sometimes a real challenge to keep up with the speed of AI development and deal with every new topic.

What I love most is when experimenting with new technologies coincides with solving a specific problem.

And that's exactly what happened.

The problem: AI is reaching its limits

I am currently working on a solution to a customer problem. Over the last few weeks I have been working in Langdock with agents - with custom instructions, referenced knowledge in the form of Markdown files, and knowledge via RAG.

The problem: The use case could not be solved with assistants. Even Claude 4.6 Reasoning - currently the best model for our problem - reached its limits.

A single run via the API cost 1 USD. That is an incredible cost for AI. And it's slow and not really reliable.

The turning point: A new design paradigm

I love testing the limits of technology. You learn an incredible amount in the process.

And then came the thought: Just because we have AI doesn't mean it has to be the universal super solution everywhere.

New design: Instead of making everything fluid with AI, we build a smart logic - with the help of AI, but with deterministic rules. A combination of code and AI.

That was the turning point.
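To make the idea concrete, here is a minimal sketch of such a hybrid design. It is not the customer solution: the rules, the labels and the names `RULES`, `ask_model` and `classify` are hypothetical, and the model call is only a stub. Deterministic rules answer whatever they can, and only the cases no rule covers are handed to a model.

```python
from typing import Callable, Optional

# Hypothetical deterministic rules: each returns a label, or None if it does not apply.
def rule_invoice(text: str) -> Optional[str]:
    return "invoice" if "invoice" in text.lower() else None

def rule_complaint(text: str) -> Optional[str]:
    lowered = text.lower()
    return "complaint" if "refund" in lowered or "complaint" in lowered else None

RULES: list[Callable[[str], Optional[str]]] = [rule_invoice, rule_complaint]

def ask_model(text: str) -> str:
    """Stub standing in for an LLM call; a real system would call an API here."""
    return "needs_review"

def classify(text: str, model: Callable[[str], str] = ask_model) -> tuple[str, str]:
    """Return (label, source). Rules run first; the model is only a fallback."""
    for rule in RULES:
        label = rule(text)
        if label is not None:
            return label, "rule"
    return model(text), "model"

print(classify("Invoice 2024-17 attached"))
print(classify("Hello, I have a question"))
```

The rule path is cheap, fast and reproducible, so the per-run cost of the model only applies to the inputs that actually need it.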

Claude Code + Agent Harness = Structured Agent Management

So: Claude Code installed, our self-developed Agent Harness set up - and off we go.

What is the Agent Harness?

In short, a structured framework that guides AI agents instead of simply letting them go. 90% of AI agents fail because they work without clear guidance. The Agent Harness solves exactly this problem.

By the way, it is open source and available on GitHub. How to really use it in everyday life - that's what our bootcamp is for.

My setup:

- I'm working with Claude 4.6 Reasoning again - but this time Claude doesn't reach its limits

- I don't write a line of code

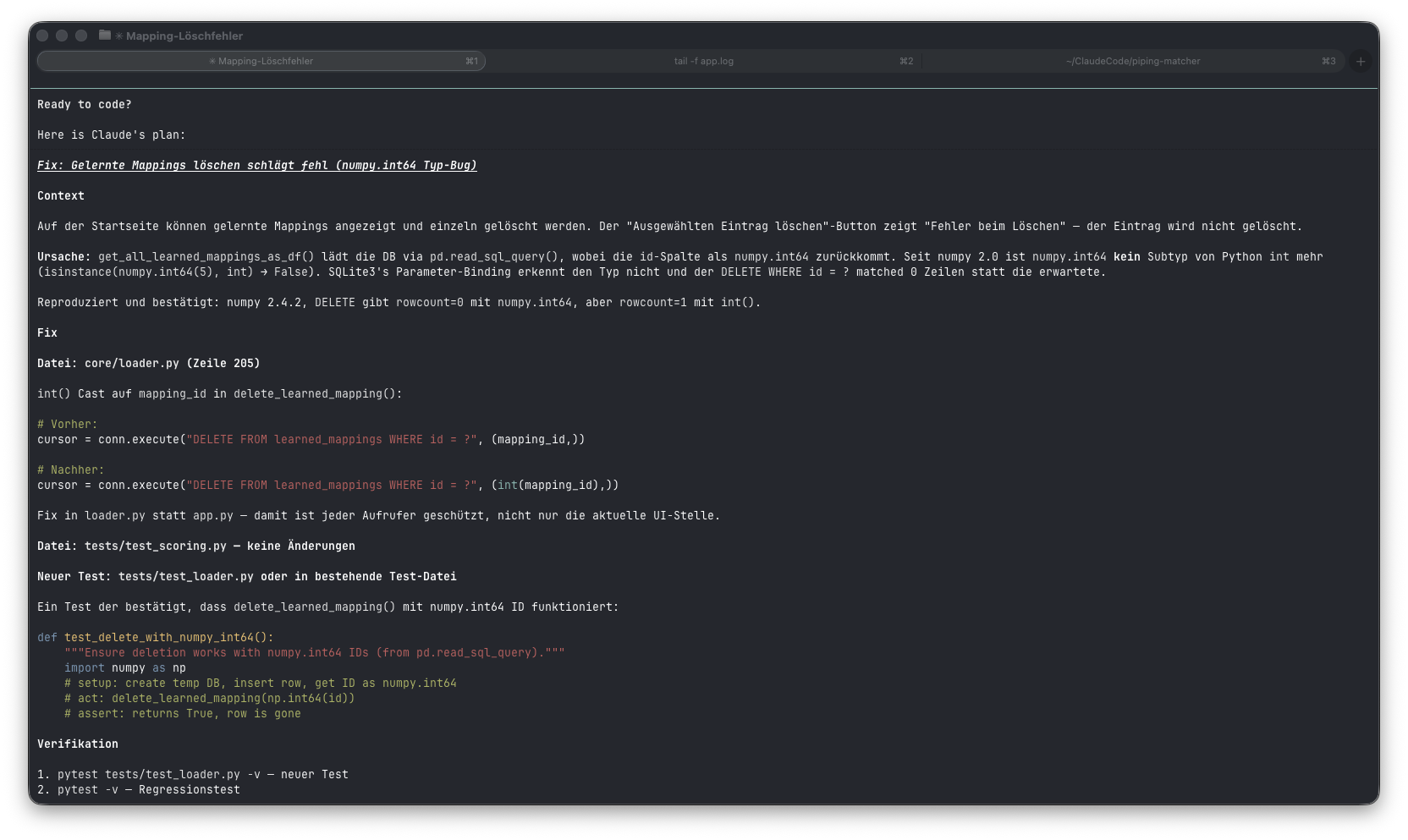

- Claude plans his steps first, we discuss them together, and only then do we get started

- He asks questions and I can make adjustments

Claude Code has drawn up a plan on how it intends to implement my requirements:

The most important principles

Claude knows exactly what we want to do:

- The tech stack

- The coding style

- Our change management approach

- Security built in

- Test-driven development

- And much more - all documented in CLAUDE.md and separate Markdown files

And here comes the crucial point:

I know the requirements and the underlying problem very well.

- We spoke at length with the customer

- We analyzed the data

- We developed a deep understanding of the customer's problem

- We clarified open points in interviews

I had already documented these requirements and concepts in detail from my work with the Langdock agents - in custom instructions and separate .md files.

Perfect for the next LLM.

My workflow: Natural language instead of typing

Now I can communicate the requirements to the AI in a structured and step-by-step manner.

And the kicker: I'm very fast on the keyboard. But typing never comes close to the speed I reach when I simply *tell* a developer what I want.

The solution: I dictate.

Then briefly check whether everything has been recognized correctly (this works surprisingly well, even with voices or music in the background) - and continue.

I take on the role of technical director again. Only this time I'm talking to the AI. And I don't have to wait days for results, but minutes.

Time to reflect for a moment. Time to think. And for a coffee.

Then it goes on.

The result

After 2 days I have:

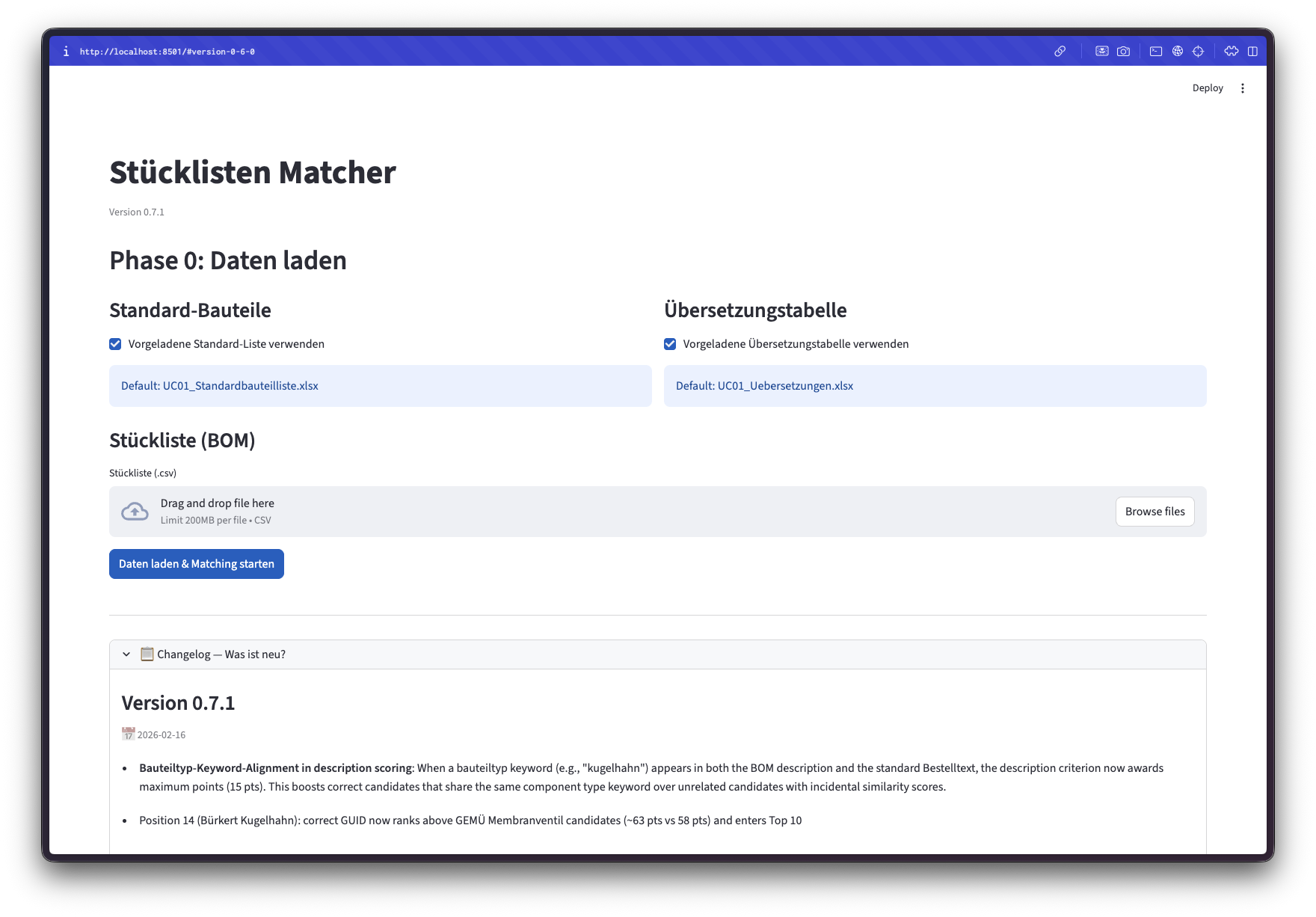

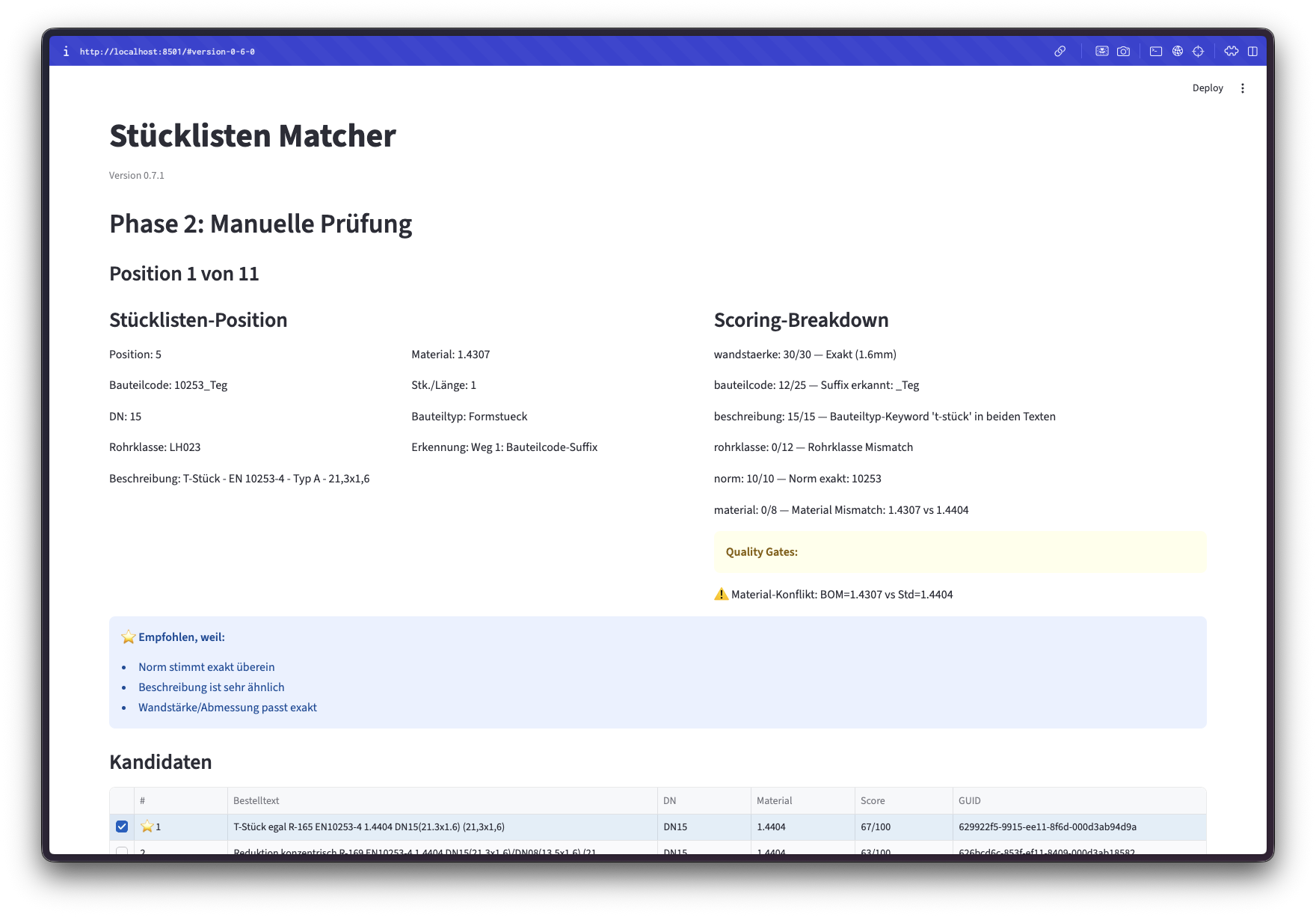

✅ A complete application with front end

✅ Clean MVP architecture

✅ Logging mechanisms

✅ User-friendly GUI

✅ The option to extend the whole thing cleanly

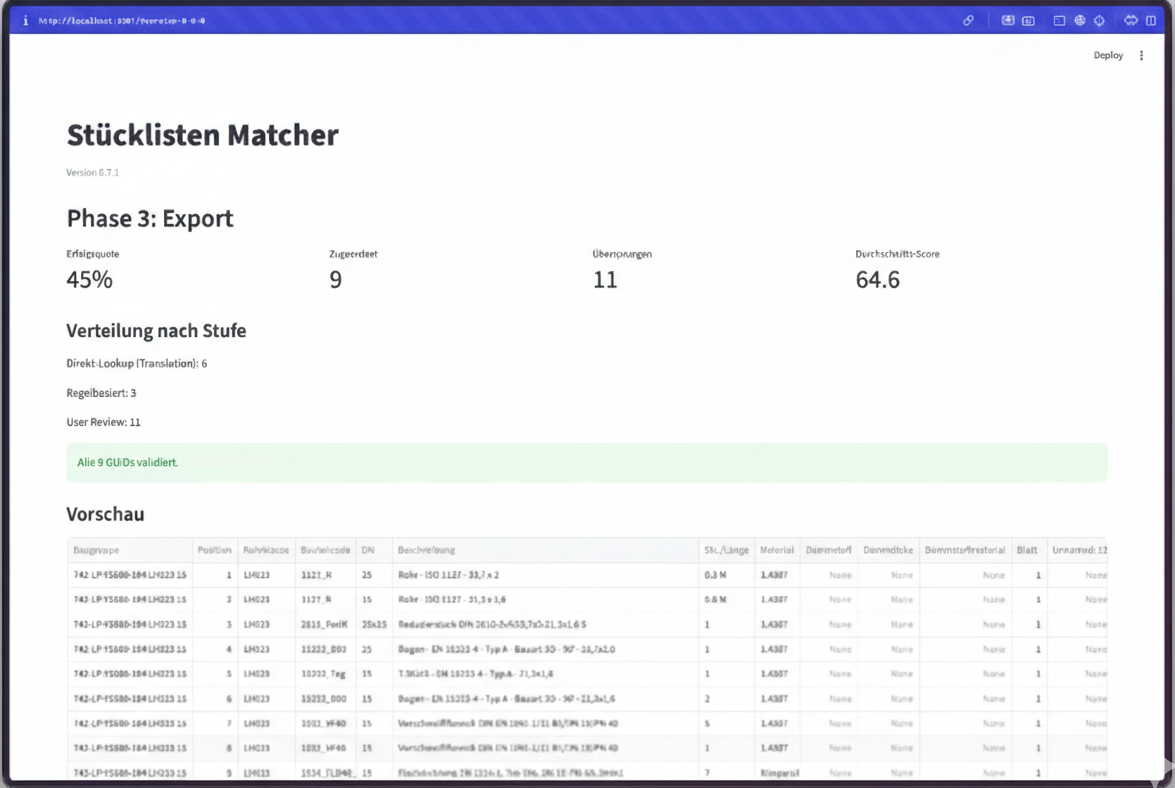

Screenshots from my application:

Effective working time: approx. 16 hours (2 working days)

When I think about how long it would have taken me as a traditional developer:

- 3-4 full working weeks minimum

- I would probably have run into bugs and problems in the meantime

- Applying the rule of thumb «take twice a developer's estimate»: 6 weeks

- That's about 252 hours

Pure developer activity: 10x is the lower limit.

For me it was more like 15x.
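The arithmetic behind these factors can be checked in a few lines. The 42-hour working week is an assumption inferred from «6 weeks is about 252 hours»; the 16 hours and the week estimates come from the text above. The helper name `speedup` is purely illustrative.

```python
HOURS_PER_WEEK = 42   # assumption: inferred from "6 weeks is about 252 hours"
ACTUAL_HOURS = 16     # effective working time stated in the text

def speedup(estimated_weeks: float, actual_hours: float = ACTUAL_HOURS) -> float:
    """Ratio of estimated manual effort to actual effort, both in hours."""
    return estimated_weeks * HOURS_PER_WEEK / actual_hours

for weeks in (4, 6):
    print(f"{weeks} weeks -> {weeks * HOURS_PER_WEEK} h -> {speedup(weeks):.2f}x")
```

Under that assumption, the undoubled 4-week estimate gives roughly 10x and the doubled 6-week estimate roughly 15x.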

What this does NOT replace

This is no substitute for communication with people.

I invested a lot of work in analyzing the customer problem. The deep understanding of the WHY, the stakeholder interviews, the data analysis - that is still manual work. Human work.

But this is where the topics of requirements engineering and development come together in the product management lifecycle.

And there are also many ways to work more efficiently during the analysis phase - see AI in requirements engineering.

The best for last

I really enjoy developing it further.

I'm a bit of a tech lead again. Just in a different way. I discuss with an AI, I review code, I control the architecture. Without writing a single line myself.

This is the change of perspective that we teach in our boot camp.

For whom is this relevant?

Not just for developers.

If you come from requirements engineering, are a business analyst or product owner - and have a technical background - then the AI Developer Bootcamp is a real change of perspective for you.

You don't just learn Claude Code, but many different approaches:

- Agentic coding with various tools

- The Agent Harness Methodology (Open Source!)

- How to integrate AI into your workflow in a meaningful way

- What works - and what doesn't

The next boot camp starts on March 3.

Are you in?

👉 To the AI Developer Bootcamp

FAQ

Do I need developer experience for Claude Code?

A basic technical understanding helps enormously. You don't have to be able to code yourself, but you do need to understand what code does. That's exactly what we train in the bootcamp.

How much does Claude Code cost?

Claude Code runs via the Anthropic API. The costs depend on the model and usage. For my 2-day project, the API costs were around 15-20 USD - significantly cheaper than the previous approach of 1 USD per single run.

What is the Agent Harness?

A structured framework for managing AI agents. It is available as open source, but the real application is best learned in practice - that's what our bootcamp is for.

Does this also work for complex enterprise projects?

Yes, but with restrictions. The principles scale, but you need clear structures. That's the difference between «trying out AI» and «using AI systematically».

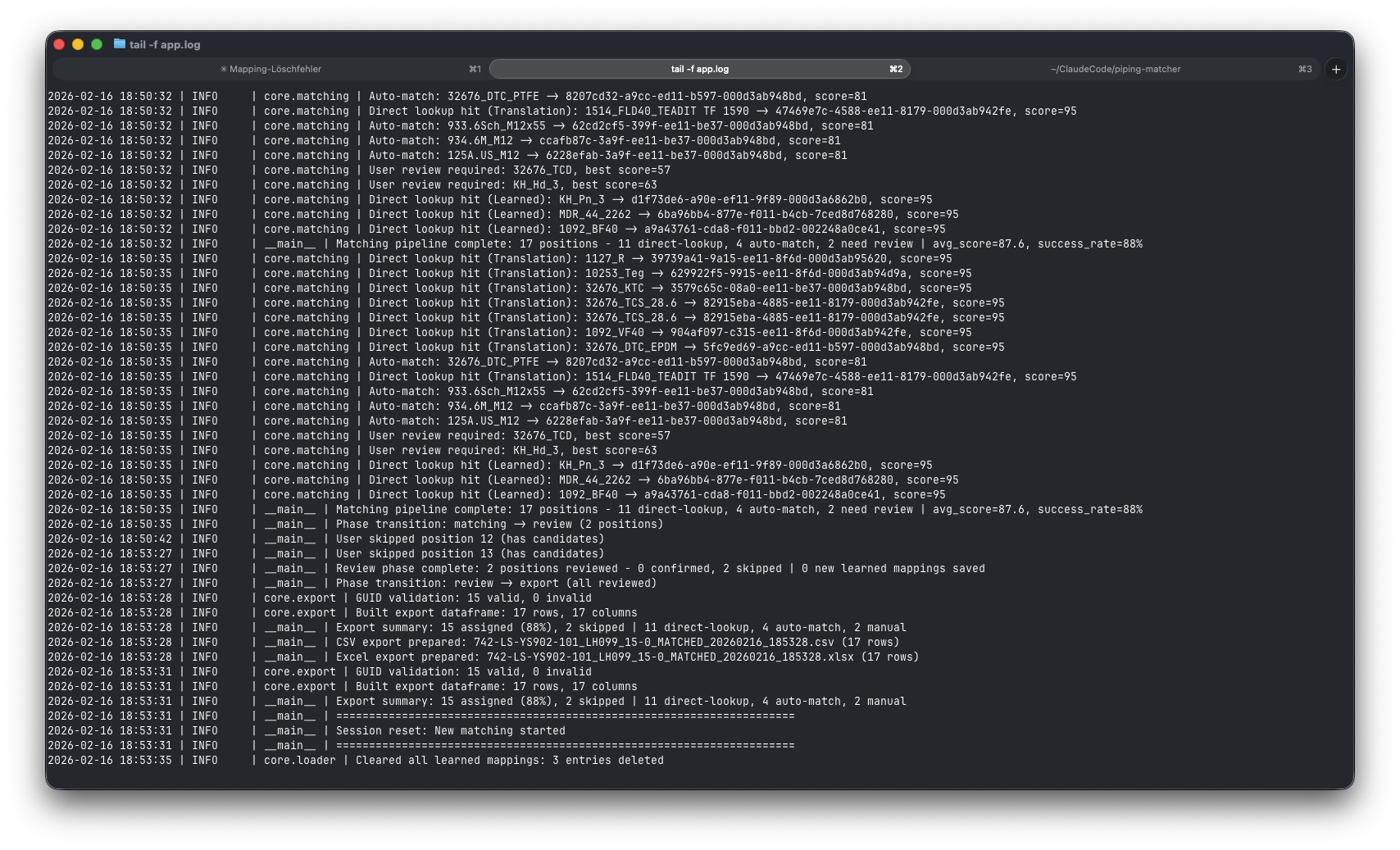

I can analyze errors together with Claude Code in the application's log file:

Michael is a requirements engineering expert, former software developer and trainer at Obvious Works. Together with Matthias, he runs the AI Developer Bootcamp.

Michael (Mr. Miroboard) Mey

Michael is a trainer who not only impresses with his knowledge, but also with his passion.

To Michael's trainer profile

To his LinkedIn profile